Building a Home HPC Computer Cluster (Beowulf?!) Using Ubuntu 14.04, Old PCs, and Lots of Googling

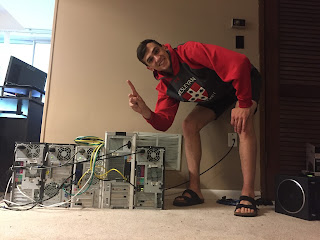

Building a Home Computer Cluster!

This post is intended for all the Linux/computer noobs out there, like myself, who want to build their own computer cluster at home but are easily daunted by all the computer experts online who throw big words around and aren't interested in people who just want to fiddle around without becoming full experts themselves. That said, I would not have been able to complete my project had there not been extensive resources online provided by people who in fact do care about the noobs. The issue is that these resources are scattered far and wide across the interweb, and this post is meant as a way to pull all of them together. I am in no way claiming to have come up with the ideas detailed below, and I have made every effort to link to whatever expert I am citing.

What knowledge I am assuming:

- A basic familiarity with the existence of terminal, and being able to type commands into it.

- If you aren't familiar: terminal exists, it's an application, and you can type things into it.

- A basic familiarity with a text editor, preferably vi and/or emacs.

What I started off with:

2: Dell Dimension 3000

1: Dell Dimension 9200

1: Compaq Presario

1: Dell Inspiron 530

These were PCs generously donated by people in my community who no longer had need of them. If you are interested in undertaking a project like this but are dissuaded by the costs, consider e-mailing a listserv in your community or putting up flyers asking people to donate their computers. You'd be amazed by the number of people who hoard their old towers in their basements, afraid to throw them away because of "security risks." They couldn't be happier to give them to someone who will get them off their hands and wipe their hard drives.

What you will need:

- X: Ethernet cables, where X=(#computers you have)+1. In my case X=6. You probably have a bunch lying around the house. I did, but not 6, so I bought some here.

- 1: Ethernet switch/hub. These are crazy cheap on Amazon (or anywhere). I bought this one, and it works fine.

- 1: PCI ethernet card, to be explained later. One that should be fine can be found here.

- A router/modem (provided by your Internet Service Provider (ISP)) that you can plug one of your computers into via ethernet. To be honest, I don't know what the difference is between a router and a modem, and I'm totally fine with that. I plugged my master node (explained below) into what my mom claims is a "router," but don't take my word for it.

First things first: Operating System (OS)

You need to choose an OS for your computers to run! I suggest Ubuntu 14.04. Honestly, the reason I chose it is that I found a fantastic video tutorial online for building a computer cluster, which you can find here, where the author (Po Chen) uses this operating system. You really should watch this video, start to finish, if you plan on undertaking this project. In fact, one of my sections below asks you to watch it. I love it so much that I'm intending this post as a way to sort of "surround" his video. He assumes you already have a working Local Area Network (LAN) (which is what your interconnected computers form), and he sort of leaves you hanging when it comes to actually finishing the setup of your High Performance Computing (HPC) cluster. However, the meat of this project is courtesy of Po Chen.

Once you've decided, you need to install the OS on all your computers. For some reason, this is not as simple as downloading it from the internet and clicking on it. You have to download the (huge) installer file, put it onto a USB stick, and then have your PCs boot from that USB stick. Ubuntu has great documentation for doing this, which you can find here. You should connect an ethernet cable from your router to the computer you are installing Ubuntu on to give it internet, as that makes the installation easier (I'm not sure it strictly needs internet, but the installer says things go smoother if it is connected, and who am I to question the Ubuntu gods).

You should have the same username and password on all your computers.

Physical Architecture of Your Setup

Once this is done, you will need to physically connect all of your computers. This is where I was woefully confused for a while, as someone with little to no experience with computer architecture. There were ethernet cables everywhere, at one point I had two different ethernet hubs, and it was ugly. There are surprisingly few resources for total noobs trying to do something like this.

What you first want to do is decide which computer is going to be your "master node." This should generally be the newest/most powerful (most aesthetically pleasing?) computer out of the bunch you've assembled.

Now this is the one place where your hands will have to get dirty and you will perform some computer surgery. Generally, the computers you get your hands on will only have a single ethernet port. However, for your cluster, you're going to want the master node to be able to connect to the internet as well as to the other nodes (they're called slave nodes, lol). Two connections, two ports. Apparently there are ways to accomplish this with one port, but as a noob, this was way beyond my capabilities. Therefore you're going to need to install the aforementioned PCI ethernet card into your master node. This is a lot easier than it sounds. All you have to figure out is how to open up your computer, slide off one of those thin metal plates that protect your computer from dust, pop the PCI card in, and you're done (after closing the computer, of course).

Here is a shot of my master node with the PCI card installed. Note that the internet connection (eth1, explained below) is the white ethernet cable, connected to the PCI card, and the LAN connection is the yellow ethernet cable.

Now we get to how you're going to want to connect the computers. The way that worked for me was the following architecture:

Where each line is an ethernet cable, the blue box is the ethernet hub/switch, and the white box is the router. I found that the internet connection for the master node wanted to be run through the PCI card, and the LAN ethernet connection wanted to be run through the OG ethernet port, for reasons unknown.

This architecture thing is really the reason why I'm writing this. For the later stuff, about the software to install and how to set up all that, there are a ton of resources. But with respect to setting up a functioning LAN where all of the nodes can connect to the internet themselves through the master node, there are surprisingly few resources. Po Chen's video is great, if you have a LAN where your slave nodes (in his case, Gauss) can connect to the internet. The majority of my time on this project was spent tearing my hair out about how to get my slave nodes to connect to the godforsaken internet.

Connecting Everyone to the Internet

I spent a lot of time fuksing with the Network Manager Graphical User Interface (GUI), trying to get everything to work there. For the master node this is fine, as I'll explain now. For the slave nodes, while I'm sure getting it to work there is possible, I couldn't figure it out; I'll get to them after the master node.

The Master Node

On the master node, what worked for me was leaving the file /etc/network/interfaces untouched (we will fuks with this file for the slave nodes) and instead using the Network Connections GUI (accessible by clicking the little internet symbol in the top right corner of your home screen and then clicking "Edit Connections..."). You want to create two new ethernet connections: one to connect to the internet and one for the LAN.

First, the internet connection: click Add, make sure the dropdown box says "Ethernet," and then click "Create...". For the connection name, I called mine "Ethernet Connection 1." Under the "General" tab, make sure the first two boxes are checked: "Automatically connect to this network..." and "All users..." Under the "Ethernet" tab, for Device MAC address, pick the entry in the dropdown list that ends in (eth1). Under "IPv4 Settings", make sure Method is set to "Automatic (DHCP)." Then click Save.

Then for the LAN: everything is the same as above (I called this connection "intranet") except that for Device MAC address you choose eth0, and under "IPv4 Settings" you set Method to Manual. Then (still in the IPv4 Settings tab), click Add on the right-hand side. Under Address, put the IP address you want for your master node. I chose 10.0.0.5 for mine, for no particular reason. There are three ranges of IP addresses that are designated as "private," and 10.0.0.0-10.255.255.255 is one of them (the other two are 172.16.0.0-172.31.255.255 and 192.168.0.0-192.168.255.255). You can choose another range, but I doubt it matters. Under Netmask, put 255.255.255.0 and leave Gateway blank. I don't really understand netmasks, but 255.255.255.0 means that every address starting with 10.0.0. counts as part of the same local network, which is all we need for a handful of nodes. Then click Save.
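For the curious, here is a minimal bash sketch of what the netmask does (the function names `ip_to_int` and `same_subnet` are made up for illustration). A computer treats another address as being on its own network when both addresses give the same result after a bitwise AND with the netmask; with 255.255.255.0 that means the first three numbers have to match. The "/24" in 10.0.0.0/24, which shows up in the iptables commands later on, is shorthand for the same mask: 24 one-bits followed by 8 zero-bits.

```bash
#!/usr/bin/env bash
# Sketch: what a netmask does. Two addresses are on the same local network
# when (address AND netmask) gives the same result for both.

# Turn a dotted address like 10.0.0.5 into one 32-bit number.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "yes" if addresses $1 and $2 are on the same network under netmask $3.
same_subnet() {
  local ip1 ip2 mask
  ip1=$(ip_to_int "$1")
  ip2=$(ip_to_int "$2")
  mask=$(ip_to_int "$3")
  if (( (ip1 & mask) == (ip2 & mask) )); then
    echo yes
  else
    echo no
  fi
}

same_subnet 10.0.0.5 10.0.0.1 255.255.255.0   # master vs. a slave node -> yes
same_subnet 10.0.0.5 10.0.1.1 255.255.255.0   # third number differs -> no
```

So any slave node address of the form 10.0.0.x (other than the master's own 10.0.0.5) lands on the same network as the master node.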

Now on the master node, we have to allow internet traffic to flow, both ways, through it. This is done by submitting iptables commands, all of which (like everything else) I found online. The best resource I think is this Ubuntu Community Help Wiki, which I'm linking to here. I tried to use the "Shared to other computers" method, but it didn't work for me. Instead, scroll down to the section titled "Ubuntu Internet Gateway Method (iptables)." Note that eth1 and eth0 are swapped in my example relative to theirs, and that we are using a different range of IP addresses. I will summarize as follows.

We have already configured what our static IP address is for the LAN in Network Connections, so no need to submit the "sudo ip addr add..." command (like they have you do in the Ubuntu wiki) (sudo is a command telling the computer that you def have the authority to do what you're telling it to do). However we will want to submit the commands:

sudo iptables -A FORWARD -o eth1 -i eth0 -s 10.0.0.0/24 -m conntrack --ctstate NEW -j ACCEPT

sudo iptables -A FORWARD -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT

sudo iptables -t nat -F POSTROUTING

sudo iptables -t nat -A POSTROUTING -o eth1 -j MASQUERADE
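If the eth0/eth1 swap is confusing, one option is to make the role of each port explicit. This is a sketch of my own, not something from the wiki: a hypothetical `gateway_rules` bash function that only prints the four commands above for a given LAN port, internet port, and subnet, so you can read them before running anything.

```bash
#!/usr/bin/env bash
# Sketch: print (not run) the gateway iptables commands for a given setup.
gateway_rules() {
  local lan_if="$1"   # port facing the switch/slave nodes (here eth0)
  local wan_if="$2"   # port facing the router/internet (here eth1, the PCI card)
  local subnet="$3"   # LAN address range (here 10.0.0.0/24)
  # Let machines on the LAN start new connections out to the internet.
  echo "iptables -A FORWARD -o $wan_if -i $lan_if -s $subnet -m conntrack --ctstate NEW -j ACCEPT"
  # Let replies (and related traffic) for those connections back in.
  echo "iptables -A FORWARD -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT"
  # Clear any old NAT rules before adding ours.
  echo "iptables -t nat -F POSTROUTING"
  # Rewrite outgoing packets so they appear to come from the master node's internet address.
  echo "iptables -t nat -A POSTROUTING -o $wan_if -j MASQUERADE"
}

gateway_rules eth0 eth1 10.0.0.0/24
```

Once the output looks right, `gateway_rules eth0 eth1 10.0.0.0/24 | sudo sh` would run it. Keep in mind that `-A` appends, so running the FORWARD rules twice leaves duplicates; `sudo iptables -L FORWARD -n` shows what is currently loaded.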

Note again that eth1 and eth0 have been swapped (relative to the wiki example), and the IP subnet has been replaced with the one relevant to our example.

Now we want to save the iptables rules:

sudo iptables-save | sudo tee /etc/iptables.sav

Now we want to edit /etc/rc.local and insert the following line before "exit 0", so that the saved rules are restored every time the master node boots:

iptables-restore < /etc/iptables.sav
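For reference, /etc/rc.local on Ubuntu 14.04 is a short shell script that ends in `exit 0`. After the edit, the working part of it might look like the sketch below (the default comment block is left out). No `sudo` is needed inside it, because rc.local already runs as root during boot.

```bash
#!/bin/sh -e
# /etc/rc.local (sketch of the edited file; default comments omitted)

# Added: reload the NAT/forwarding rules saved earlier with iptables-save.
iptables-restore < /etc/iptables.sav

exit 0
```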

Then, to allow IP forwarding, we submit the command:

sudo sh -c "echo 1 > /proc/sys/net/ipv4/ip_forward"

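That only lasts until the next reboot, so finally we want to edit /etc/sysctl.conf and uncomment (delete the # in front of) the line

#net.ipv4.ip_forward=1

so that it reads net.ipv4.ip_forward=1 and forwarding stays on after a restart.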
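To confirm forwarding is actually on (right now, and again after a reboot), here's a small bash sketch. The `forwarding_enabled` name is made up; the function just reads the kernel's live setting, which is "1" when forwarding is on. The file path is a parameter only so the check can be tried on a copy.

```bash
#!/usr/bin/env bash
# Sketch: check whether IPv4 forwarding is switched on for the master node.

# Exit status 0 if the flag file contains "1", non-zero otherwise.
# By default it reads the kernel's live setting.
forwarding_enabled() {
  local flag_file="${1:-/proc/sys/net/ipv4/ip_forward}"
  [ "$(cat "$flag_file" 2>/dev/null)" = "1" ]
}

if forwarding_enabled; then
  echo "IPv4 forwarding: on"
else
  echo "IPv4 forwarding: off (check the echo command and /etc/sysctl.conf)"
fi
```

Two related commands: `sudo sysctl -p` reloads /etc/sysctl.conf without rebooting, and `sudo iptables -t nat -L POSTROUTING -n -v` should list a MASQUERADE rule for eth1 if the rules from /etc/iptables.sav were restored.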

The Slave Nodes

Now for the slave nodes: for each of them, I went and deleted all of the Network Connections under "Edit Connections..." Then I edited the /etc/network/interfaces file so that it reads:

auto lo
iface lo inet loopback

auto eth0
iface eth0 inet static
    address 10.0.0.x
    netmask 255.255.255.0
    gateway 10.0.0.5
    dns-nameservers 8.8.8.8

where x is a number of your choice: the IP address of your slave node (it must be different for each node and must not be the master node's 5). 10.0.0.5 is the IP address of your master node (as specified above). You are basically telling the computer to use the master node as its "gateway" to the internet. I don't understand what DNS is, and don't plan on learning, which is probably why figuring this out took so long. All I know is that it is like an internet "phonebook," that it is annoying, and that 8.8.8.8 is Google's free public DNS server. Also, the netmask is an opaque thing I don't get (see above). This setup worked for me, and I don't question these sorts of things.
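Since every slave node gets the same file except for its own address, a small bash sketch can print it for you. The `slave_interfaces` function is hypothetical; it assumes eth0, a master node at 10.0.0.5, and Google's 8.8.8.8, exactly as in the file above.

```bash
#!/usr/bin/env bash
# Sketch: print /etc/network/interfaces contents for one slave node.
# Assumes: LAN port eth0, master node 10.0.0.5, DNS server 8.8.8.8.
slave_interfaces() {
  local address="$1"               # this slave node's LAN address, e.g. 10.0.0.1
  local gateway="${2:-10.0.0.5}"   # the master node's LAN (eth0) address
  cat <<EOF
auto lo
iface lo inet loopback

auto eth0
iface eth0 inet static
    address $address
    netmask 255.255.255.0
    gateway $gateway
    dns-nameservers 8.8.8.8
EOF
}

# Preview the file for a slave node at 10.0.0.1:
slave_interfaces 10.0.0.1
```

After pasting the function into a terminal on, say, the node at 10.0.0.1, `slave_interfaces 10.0.0.1 | sudo tee /etc/network/interfaces` would overwrite the file, so keep a copy of the original first (for example `sudo cp /etc/network/interfaces /etc/network/interfaces.bak`).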

Now, picking up with the Ubuntu wiki example, we want to disable networking. I had an incredibly hard time doing this, as the command on the wiki didn't work for me, neither did many examples I found on the internet. What did work was the command:

sudo ifdown eth0

which should shut down networking on that port (this at times hasn't worked for me either, but you needn't worry: deleting all our Network Connections info should have done the trick). We're doing this so that we can restart networking with the right settings. Again, there's no need for the "sudo ip addr add..." command, as we specified our IP address above. Now, however, we do want to submit the command:

sudo ip route add default via 10.0.0.5

where, again, 10.0.0.5 is the internal (eth0) IP address of our master node, which is also connected to the internet (via eth1). You do not have to do the wiki's next section involving DNS servers, as we have already specified that we should be using Google's DNS server. Then we submit into terminal

sudo ifup eth0

to restart networking. Hopefully, this gives you internet access on your slave nodes, which will be very useful for installing software on them, as you won't have to manually connect your internet ethernet cable to each slave node individually. You can check this by typing into terminal

ping google.com

You should see output telling you that it got something like 64 bytes from google in something like 10 ms (press Ctrl+C to stop it). If you don't, time to troubleshoot!

Now that you have your LAN set up, I'll leave you in Po Chen's good graces.

I'll wait.

PO CHEN

PLZ GO SEE PO CHEN'S VIDEO. WE CANNOT FINISH W/O YOU WATCHING PO CHEN.

POST-PO CHEN (Slurm/Job scheduling)

Great! You've watched Po Chen's video, so you've successfully installed nfs, ssh, and such on all of your nodes. Note that had we not set up our LAN so that the slave nodes have internet, you would have had to give each of your nodes internet manually, and connect a monitor, mouse, and keyboard to each of them individually, to complete Po Chen's steps. It would not have been possible to ssh into Gauss, in Po Chen's example, and download, for example, nfs-client. I didn't figure out the slave node internet thing until the very end of my process, and it was a disaster.

You have a functioning computer cluster! Now you're going to want a way to submit jobs to it, assuming the purpose of building this cluster was to have your own HPC cluster. There are lots of good open source schedulers/workload managers out there, but I chose slurm, simply because I'd used it on an HPC cluster before (one associated with a university). You'll want to install it on your master node and all of your slave nodes (FYI, I loosely followed this blog for this section). You'll submit the command:

sudo apt-get install slurm-llnl

You'll also need to install munge (for authentication) and ntp (for time synchronization):

sudo apt-get install munge

sudo apt-get install ntp

To synchronize the time, the recommended thing to do is to set your time zone to UTC so you don't have to worry about daylight saving time or anything:

sudo timedatectl set-timezone UTC
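Once ssh works (which Po Chen's video sets up), you don't have to walk over to every slave node to run these installs. Here's a minimal bash sketch, assuming you can ssh from the master node into each slave node; the node names danny1 to danny4 and the `on_all_nodes` function are hypothetical, so swap in your own. Setting `DRY_RUN=1` only prints what would be run.

```bash
#!/usr/bin/env bash
# Sketch: run the same command on every slave node over ssh.
# Node names are hypothetical; replace them with your own slave nodes.
NODES=(danny1 danny2 danny3 danny4)

on_all_nodes() {
  local node
  for node in "${NODES[@]}"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Only show what would be run.
      echo "ssh -t $node $*"
    else
      # -t gives sudo a terminal so it can ask for your password.
      ssh -t "$node" "$@"
    fi
  done
}

# Example (uncomment to use):
# on_all_nodes sudo apt-get install -y slurm-llnl munge ntp
# on_all_nodes sudo timedatectl set-timezone UTC
```

Running it one node at a time keeps password prompts readable; the master node itself still needs the same commands typed locally.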

For munge, on the master node you'll run the command:

sudo create-munge-key

Now the munge.key file that this command creates is a real bastard, as it is really hard to move the file around and generally play with it. What did not work for me was sending it to the /nfs directory Po had you set up. Instead, I used scp to send it over to the home directory of each slave node:

sudo scp -r /etc/munge/munge.key danny@danny1:~/

where danny is the user on the slave node danny1. Then, after having installed munge on that slave node, submit the commands:

sudo mv munge.key /etc/munge/

sudo chown munge /etc/munge/munge.key

Note that you do not want to run create-munge-key on any of your other nodes. That would create a different munge key, and you want all of your nodes to have the same file. The second command changes the owner of the munge.key file to munge. That is important because the munge daemon runs as the munge user and needs to be able to read its key.

Then on each node, you'll need to start munge:

sudo /etc/init.d/munge start
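Before moving on to slurm, it's worth checking that munge really works, since slurm relies on it. The usual check is to create a credential with `munge -n` and decode it with `unmunge`, both locally and on another node over ssh; the remote check only passes if both nodes share the same munge.key. The bash sketch below wraps that; the function names are hypothetical, and it assumes unmunge's output contains a line starting with `STATUS:` that says `Success` when decoding worked.

```bash
#!/usr/bin/env bash
# Sketch: check munge locally and against each slave node.

# Reads unmunge output on stdin; succeeds if the STATUS line reports Success.
munge_ok() {
  grep -Eq '^STATUS:[[:space:]]+Success'
}

# Usage: check_munge NODE [NODE ...]   (node names are your own)
check_munge() {
  local node
  # Encode and decode on this machine.
  if munge -n | unmunge | munge_ok; then echo "local: ok"; else echo "local: FAILED"; fi
  # Encode here, decode on each slave node (needs the same munge.key there).
  for node in "$@"; do
    if munge -n | ssh "$node" unmunge | munge_ok; then
      echo "$node: ok"
    else
      echo "$node: FAILED"
    fi
  done
}

# Example (uncomment to use):
# check_munge danny1 danny2 danny3 danny4
```

A FAILED remote line usually points at a key mismatch or munge not running on that node.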

Now you'll need to configure slurm. This can be done by visiting this website (a form that generates a slurm.conf file for you), which is very self-explanatory. However, some comments:

- Obviously, I didn't set up my cluster the usual way, where all of the computers are the same, with the same configurations. In fact, some of them were so old (Dell Dimension 3000) that they only had a single core (only 1 CPU, as opposed to the usual 2). Therefore, I was confused as to what I should put for CPUs under the Compute Machines heading. I left it as 1 for now, and fixed the problem later as I will explain.

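- I found that ReturnToService should be set to 2 (so that a node marked DOWN comes back into service as soon as it registers with a valid configuration).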

- Other than that, everything else for me was fine as default (obviously you should change the IP address of your master node to whatever you chose, etc.). Download the file onto your master node.

Now, to fix the problem of differing machines. I found this example slurm.conf file online which was helpful. My takeaway was that you want these lines commented out in the slurm.conf file:

NodeName=linux[1-32] Procs=1 State=UNKNOWN

PartitionName=debug Nodes=linux[1-32] Default=YES MaxTime=INFINITE State=UP

NodeName=localhost Procs=1 State=UNKNOWN

PartitionName=debug Nodes=localhost Default=YES MaxTime=INFINITE State=UP

And instead have, for example, for my node danny1 which had 1 processor (CPU):

NodeName=danny1 NodeAddr=10.0.0.1 Procs=1 State=UNKNOWN

and so on for the other nodes. You can see how many processors your machine has by typing the command into terminal

slurmd -C

Then my final line had

PartitionName=debug Nodes=danny[1-5] Default=YES MaxTime=INFINITE State=UP
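With machines of different sizes, writing one NodeName line per machine by hand is easy to get wrong. A small bash sketch (the `nodes_section` function is hypothetical) can build those lines, plus a matching PartitionName line, from a list of name, address, and CPU count. Only danny1's values come from this post; the danny2 line is made up, so fill in your own numbers from `slurmd -C` on each machine. It lists the partition's nodes separated by commas, which slurm accepts as an alternative to a range like danny[1-5].

```bash
#!/usr/bin/env bash
# Sketch: build the node/partition part of slurm.conf from
# "name address cpus" lines on stdin.
nodes_section() {
  local name addr cpus names=""
  while read -r name addr cpus; do
    [ -z "$name" ] && continue   # skip blank lines
    printf 'NodeName=%s NodeAddr=%s Procs=%s State=UNKNOWN\n' "$name" "$addr" "$cpus"
    names="${names:+$names,}$name"   # comma-separated list of node names
  done
  printf 'PartitionName=debug Nodes=%s Default=YES MaxTime=INFINITE State=UP\n' "$names"
}

# danny1 is from the post; the danny2 values are hypothetical.
nodes_section <<'EOF'
danny1 10.0.0.1 1
danny2 10.0.0.2 2
EOF
```

The printed lines replace the commented-out NodeName/PartitionName lines in slurm.conf.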

Now copy this file to all nodes (you can just put it in the /nfs directory), and place it in the directory /etc/slurm-llnl/.

Now you can start slurm (on every node) by typing

sudo /etc/init.d/slurm-llnl start

Your cluster and job scheduler are now fully functional! To submit jobs, create a new directory in /nfs with a test job and a batch script in it, which I call script.sh. A sample batch script is

#!/bin/bash -l
#SBATCH --job-name=MYFIRSTJOBWOOOOO
#SBATCH --time=0-01:00
#SBATCH --partition=debug
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=1
#SBATCH --cpus-per-task=1
#SBATCH --mem-per-cpu=100
#SBATCH -o stdout
#SBATCH -e stderr
#SBATCH --mail-type=END
#SBATCH --mail-user=UREMAIL@HERE.COM
# Compile the Fortran test program, then run it
gfortran -O3 test.f
./a.out

which you submit by typing

sbatch script.sh

where test.f is your test program. You can check on the progress of your job (whether or not it has started, how long it's been running, etc.) by typing squeue into the command line, and see whether your nodes are online by typing sinfo. There is lots of helpful slurm documentation online, which can be found here.

Accessing Your Cluster From Afar

I'm going to be leaving home soon for grad school, so I would like to be able to ssh into my cluster. This is much easier than it sounds. From your master node, type "What's my IP address" into Google. Write down this number; for argument's sake, let's say it's 100.1.1.1. Now type this number into your favorite web browser, which should take you to your router settings page, set up by your ISP. The username and password should be printed on your modem. I have Verizon, and set it up by clicking on the Firewall icon, then clicking on Port Forwarding in the left-hand sidebar. You'll want to specify the IP address of your master node (the address the router gave its eth1 connection, which you can see with ip addr show eth1, not the 10.0.0.5 LAN address, which the router can't see), and then under Application To Forward click on Custom Ports. For ssh you want TCP, and the usual port for ssh is 22. Click Add.

Now go somewhere else (outside of your home network) and see if you can ssh into your cluster. This can be done by typing into terminal

ssh danny@100.1.1.1

where danny is your username. Make sure you have a strong password! Apparently some buttfaces on the internet scan around for open ssh ports and then try to blast them open via brute force.

If you've made it to this point, thank you, because you're the one. Congrats on your newly functioning HPC cluster!
